Building an $80,000 Compute Cluster for Hack@UCF

A high-powered playground for students to learn and grow

Background

During my executive tenures at Hack@UCF, I was one of the club representatives responsible for supporting the Lockheed Martin Cyber Lab project.

One of my goals for the project was to propose that some of the funding be reserved for a new, dedicated compute cluster. This cluster would give students a playground for workshops, research, and training for cybersecurity competitions. It would host virtual machines, containers, and other resources for them to use as they see fit.

After more than a year, I succeeded in getting an $80,000 line of funding approved by the dean and assembled a team to design the highest-performance cluster our funding could buy. This post is a collection of the documents and photos from the project.

Results

Deployment of the cluster happened in phases as groups of servers were purchased, built, shipped to us from UCF’s supplier, and then installed.

Behold stage 1 of the deployment: 72+ Cores and 256+ GB of RAM :)

Planning

The Budget

Every project starts with a budget. After some negotiation with the CECS Dean and the UCF Foundation, I was able to secure $80,000 in funding for the project, which gave us a lot of room to work with!

With funding in hand, my team and I were able to put together budgets for the cluster. You can see these below. They include the cost of the compute nodes, storage node, and networking equipment, as well as the cost of associated accessories like SFP cables and power distribution equipment.

The Base Cluster Budget

Second Phase Expansion Budget

The Proposal

With our detailed budgets in hand, we were able to assemble a final proposal for the Dean and UCF Foundation. This proposal included a detailed breakdown of the cluster’s specifications, as well as a justification for the cost of the cluster and a plan for how we would manage it.

Governance

Speaking of management, we also had to put together a plan for how we would manage the cluster. This included a proposal for a committee to oversee the cluster’s operations, as well as a plan for how we would handle the cluster’s finances.

A Committee to Manage the Project

We created a dedicated committee to oversee the design and construction of the cluster, and to create the policies and standards that would allow its stewardship to carry forward as new executive teams and student system administrators took on the responsibility of maintaining it. This committee included myself, the club president, the club treasurer, and other members of the club and our CCDC team.

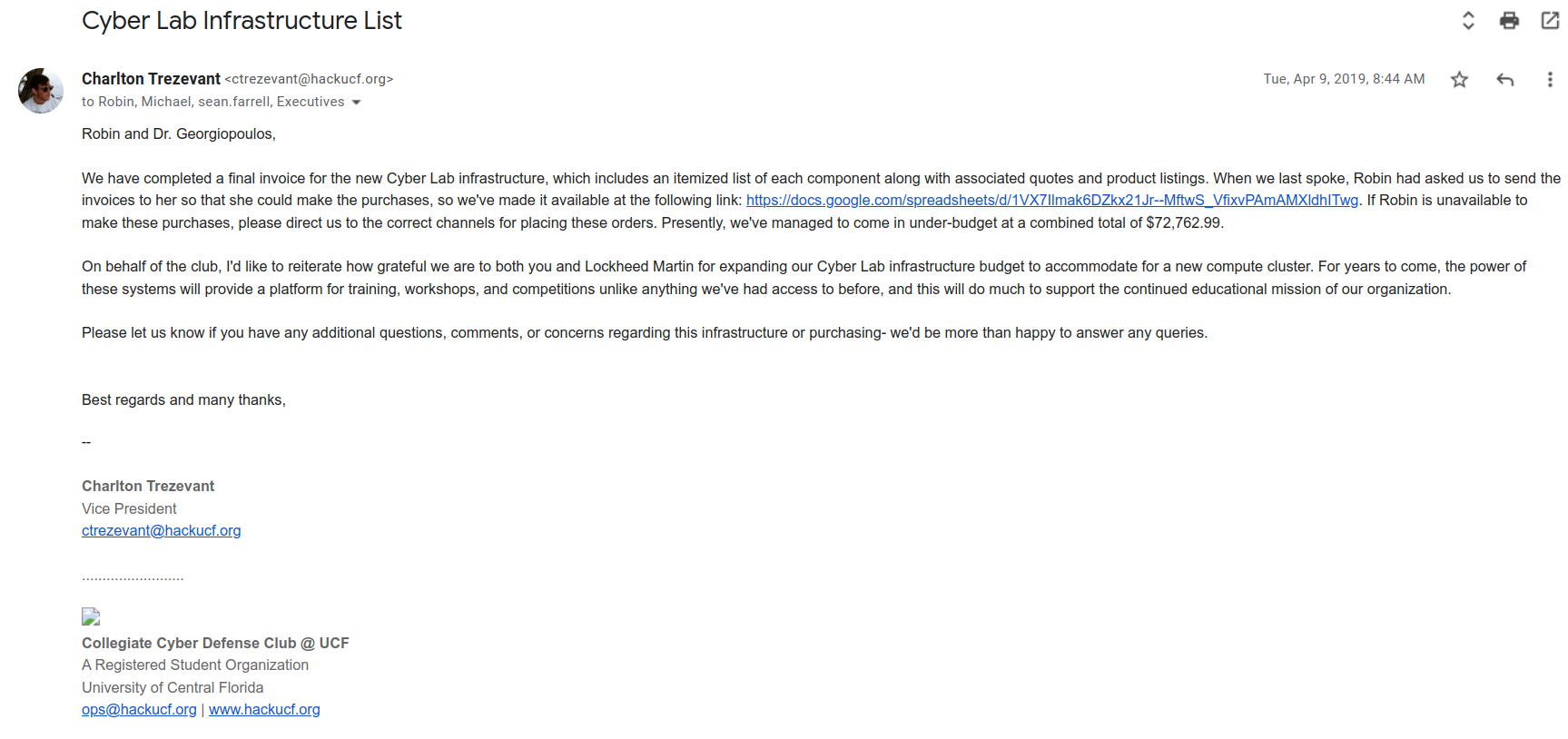

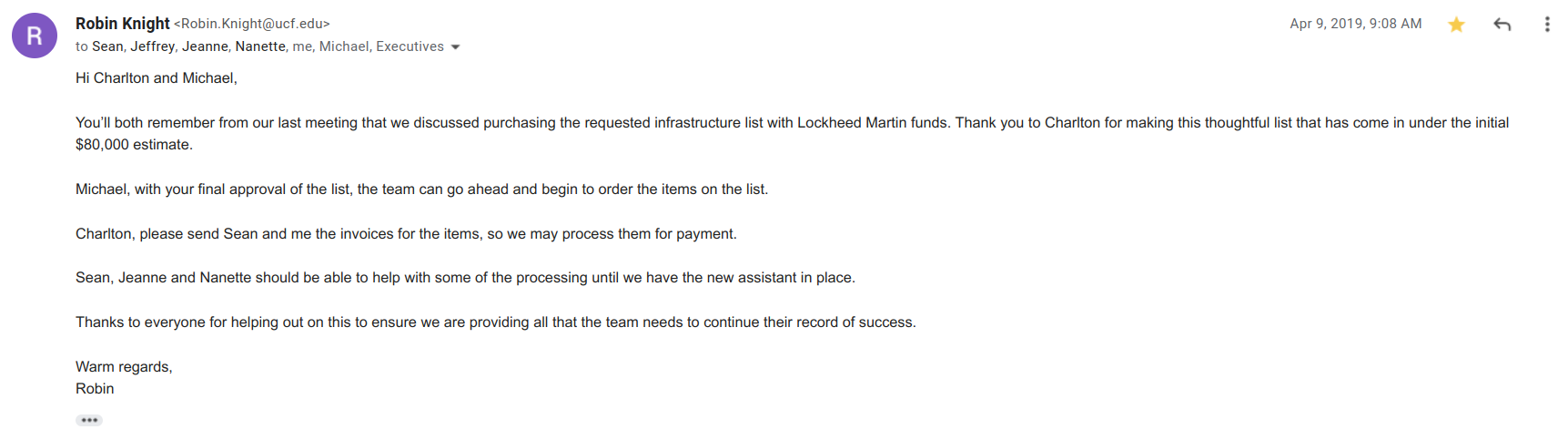

Purchasing Everything

COVID Throws a Wrench in Things

Since this project was funded by the UCF Foundation, we had to go through UCF’s procurement office to purchase the equipment. Unfortunately, the COVID-19 pandemic hit right as we were about to submit our purchase order, and the university froze all non-essential spending. This meant that we had to apply for a special purchasing exemption in order to submit our PO.

Moving Forward

Luckily, our request was approved, and we were able to begin submitting our POs to Silicon Mechanics! We had to go through this process in phases as we navigated the approval and construction processes for each part of the cluster.

Serving the Students

We inaugurated the cluster with a series of planned demonstrations covering offensive, defensive, and forensic/incident response scenarios. These are outlined in the PDF below, an original artifact from our committee’s planning sessions.

Cluster Specifications

The most important invoices for cluster components were broken up into three parts: the compute nodes, the storage node, and the cache SSDs we added to the storage node later on. I’ve included the PDFs of these invoices below so you can see the specifications for those components alongside their cost breakdown.